https://cryptohaze.com/slides/Cryptohaze%20DC20%20Final%20Slides.pdf

If you're interested in a commentary on the slides, read on!

In case you missed my talk at Defcon 20, I'm putting a written version of it up here, along with the relevant presentation slides. I'll link the video when it goes up. This is a summary of what I talked about, and it includes some information that was not available at the time of the actual talk.

I've been writing high performance password cracking tools for the past 4 years or so. When I started, the nVidia 8800GTX was the high performing card, the Pentium 4 was still the common chip, and John the Ripper was still the leading password cracking tool. The Playstation 3 had just come out, and while the Cell was an impressive processor at the time, I decided not to pursue it, because GPU development tends to iterate faster than gaming consoles. This turned out to be the correct decision: the PS3 is no faster than it was at launch while GPUs are 3 generations beyond it, and Sony has eliminated the ability to use the PS3 as a general purpose computer in their failed attempt to prevent it from getting cracked open.

There are a few icons that will appear throughout the presentation. Target hashes are the things you are looking for. I'll define "The Cloud" for this talk as "Other people's computers" - machines you can't touch. And, "The Cluster" is machines you can touch.

Now, I'd like to discuss the state of "cloud password cracking." We keep hearing about it, but what is it?

The first thing that usually comes to mind is Amazon's EC2 GPU instances. Depending on who you believe, they are either the end of passwords as we know it, or they're two ancient nVidia Teslas for $2.10/hr. I'll be coming back to that later.

The next "big thing" from 2011 was Thomas Roth's "Cloud Cracking Suite." This, unfortunately, has been talked about at several conferences and is badly missing in action with no news for the past 6 months or so.

Moxie has his cloud cracker service online, now with MS-CHAPv2 pwnage added. Unfortunately, to use it, you send your hashes to him, and you no longer have control over them.

Finally, there's the DIY solution, and this is what a lot of people end up with. It's really the only option right now.

For the pentesters working with NDAs, it's pretty clear - you can't just throw the hashes at the internet and see what sticks. They must remain within your data center, your computers, whatever - things under your control.

However, for the people dumping hashes out of other websites, it's not so clear. The story of LinkedIn is relevant here. Based on the data from people who had changed their passwords, the database of hashes was actually dumped 6-8 months before it was "discovered." Nobody knew. Whoever had dumped the hashes out of LinkedIn had gotten away with it! Unfortunately, someone with access to the partially cracked list decided to crowdsource the effort, and posted them to Inside Pro.

There are people out there who have good password practices. There are people who use a totally unique password for each site, and there are people who will use a password "framework" with the site name as part of it. People who used a totally unique password confirmed that, yes, their LinkedIn password was part of the dump. And as things progressed, people started seeing a lot of "linkedin" in the passwords. It was clear this came from LinkedIn, and it was also clear that they had no clue.

If the attackers had kept the hashes private, nobody ever would have discovered that LinkedIn was dumped.

The same is true of eHarmony, Last.FM, and plenty of others. Hash disclosure means the world knows what you've just dumped hashes from.

The Cryptohaze Multiforcer is one such solution to this problem.

It's a fully open source, US based tool, GPLv2. It supports Windows, Linux, and OS X. It probably also builds on FreeBSD with CUDA, though I've not tried (if you care to do so, please let me know!). The new framework supports CUDA, OpenCL, and the host CPU, and more importantly supports these in combinations (we'll come back to this later). It's a C++ framework with CUDA and OpenCL kernels. And, finally, it has integrated network support. This is not a solution that requires external wrappers and scripts like some other closed source products. This is built in, and works at the same level as the native code.

Why does network support matter so much? Well…

When it comes to password cracking, especially brute forcing, enough is never enough. Faster is always better. And eventually, cramming more GPUs into a single system just doesn't work. You hit power limits, you hit driver GPU core limits, or you light your system on fire.

The Multiforcer works around this by supporting as many systems as you want to throw at it.

The process for using the distributed Multiforcer is quite simple - the same binary acts as both the client and the server.

The server instance is fed the target hashes, and is run with the exact same command line switches you use to set up a single system attack. The only flag that is added is "--enableserver" - this turns it into a network box. I do highly suggest using "--serveronly" as well, which means that the server does not attempt to run any device code. GPUs are occasionally glitchy, and drivers aren't much better, so by not running any compute in the server instance, you dramatically reduce the risk of things crashing and killing the job.

The client instance is fed a single argument: "--remoteip=[hostname]". It will idle when the server is not present, quietly trying to connect every few seconds. When it successfully connects, it will work on the provided workload until the server exits or disconnects the client. At that point, the client goes back to waiting for another server instance to spin up.
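The idle-and-retry behavior described above can be sketched in a few lines of Python. This is not the Multiforcer's code (that is C++), just a minimal illustration of the pattern. The function name, the port argument, and the 5 second default are all assumptions made up for the example.

```python
import socket
import time


def connect_with_retry(host, port, retry_seconds=5.0, max_attempts=None,
                       connect=socket.create_connection, sleep=time.sleep):
    """Try to reach the server until it answers.

    Returns the connected socket, or None if max_attempts is exhausted.
    `connect` and `sleep` are injectable so the loop can be tested offline.
    (Hypothetical helper, not part of the Multiforcer.)
    """
    attempts = 0
    while max_attempts is None or attempts < max_attempts:
        attempts += 1
        try:
            return connect((host, port))
        except OSError:
            # Server not up yet: idle quietly, then try again.
            sleep(retry_seconds)
    return None
```

With the defaults, something like `connect_with_retry("server.example", 12345)` (a placeholder host and port) would block until a server shows up. After the server drops the connection, the caller would simply call it again.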

At some point soon, I will be supporting clients that run as a service and have idle detection - if a user shows up, they will pause until the user goes away from the machine. This allows the use of corporate desktops as remote nodes.

What does this enable? Let's take a look!

This is a run from a few weeks ago. It involved 6 machines, about 30 GPU cores, and an awfully fast attack on NTLM. We hit 154B NTLM/sec, and the code is even faster now than it was for that test. Please notice that this is NOT a single hash run - this is 10 hashes!

Just in case you were wondering about SHA1, I support that too. For this test, we ran 1000 SHA1 hashes, and hit 30B/sec.

This is some of the hardware we used for the tests. It's good fun to play with!

I'd like to shift gears back to EC2. We've heard that EC2 is the end of password cracking as we know it, and we've heard that it's overpriced and slow for what it is. I've gone about doing something silly - getting "numbers" for it.

I'm using my OpenCL code for the CPU, and CUDA for the GPUs, and the top graph shows the speeds. Clearly, the GPU instance is by far the fastest (and also gains a significant boost from the CPU - around 12% of that is the CPU contributing via OpenCL).

However, the GPU instance is also, by far, the most expensive at $2.10/hr. BUT - that is the on-demand price. EC2 also has what's called "spot" pricing, which is a cheaper rate but involves instances that may get shut down at any time. This is OK, though - I handle instances dying and coming back later. So, if we look at spot pricing, instead of $2.10/hr, it's around $0.35/hr. That's a huge savings!

Let's look at the price and performance in a more meaningful manner: Cost to Crack.

I've calculated out the cost to crack NTLM length 8 (full US charset). And, we can spend anywhere from just under $10000 down to under $200. The EC2 GPU spot instances are by far the cheapest option, and under $200 to crack NTLM length 8 means that, yes, they are slow, but they're still cost effective!
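For anyone who wants to redo this kind of math, here is a rough sketch of the "cost to crack" arithmetic: keyspace divided by hash rate gives the time to exhaust it, and time multiplied by the hourly price gives dollars. It assumes "full US charset" means the 95 printable ASCII characters and that only length 8 exactly is searched. The function names are made up for the example, and the hash rate you plug in for an instance is yours to measure.

```python
def keyspace(charset_size, length):
    """Number of candidate passwords of exactly `length` characters."""
    return charset_size ** length


def hours_to_exhaust(charset_size, length, hashes_per_second):
    """Worst-case hours to try every candidate at a given rate."""
    return keyspace(charset_size, length) / hashes_per_second / 3600.0


def cost_to_crack(charset_size, length, hashes_per_second, dollars_per_hour):
    """Worst-case dollars to exhaust the keyspace on rented hardware."""
    return hours_to_exhaust(charset_size, length, hashes_per_second) * dollars_per_hour


if __name__ == "__main__":
    # 95 printable ASCII characters is an assumption about "full US charset".
    print("candidates:", keyspace(95, 8))
    # 154e9 is the NTLM rate quoted for the 6 machine run earlier in the post.
    print("hours at 154B/sec:", hours_to_exhaust(95, 8, 154e9))
```

At the 154B NTLM/sec figure from the distributed run, the length 8 keyspace works out to roughly 12 hours. Since cost scales linearly with the hourly rate, going from $2.10/hr to $0.35/hr cuts the bill by a factor of 6 for the same instance speed.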

Unfortunately, they are still blown out of the water by a single AMD 6990 (the current leader card). The AMD GPUs include some hardware operations that dramatically speed up our current hash functions, and it shows.

Next, I'd like to talk about Rainbow Tables. They're not dead yet!

I'm sure most of you have heard that rainbow tables are useless, because they're good against unsalted hashes only. And, as we know, absolutely everyone out there salts their hashes! Well, except for Microsoft NTLM hashes. And a few websites that use SHA1 or raw MD5. There've only been a few dozen of those this year so far.

The other thing is that even with salted hashes, if the salt is known ahead of time, rainbow tables can be built for it. If someone were silly enough to salt with the username, you could build tables for that user. Perhaps, "Administrator."

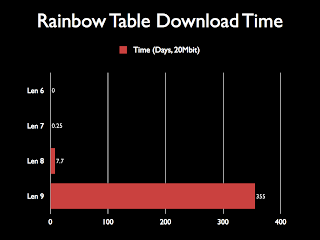

The problem is that rainbow tables are getting bigger. We clearly see the increasing size of tables as the length goes up - but this is a log scale graph.

Looking at a linear scale graph, it's obvious that the length 9 tables would be huge. Roughly 60TB for everything. This is big. Not only do people not usually keep this sort of storage around, it would take forever to download.

Specifically, almost a year. A week at 20Mbit is perhaps acceptable, but a year? Not by any reasonable metric is that sane.
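The download estimate is easy to check. A quick sketch, assuming decimal units (1 TB = 10^12 bytes, 1 Mbit = 10^6 bits) and a link that runs flat out the whole time. The function name is made up for the example.

```python
def download_days(size_bytes, link_bits_per_second):
    """Days needed to move `size_bytes` over a link running at full speed."""
    seconds = size_bytes * 8 / link_bits_per_second
    return seconds / 86400.0


if __name__ == "__main__":
    # 60 TB of tables over a 20 Mbit connection (decimal units assumed).
    print(download_days(60e12, 20e6))
```

That comes out to a bit under 280 days of saturating the connection around the clock, before accounting for any protocol overhead or poorly seeded torrents.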

Is that it? Are rainbow tables stuck forever at length 8 with a week+ to download them if the torrent is very well seeded (they're usually not)?

If they were, I wouldn't be talking about them. Fortunately, I've invented a solution.

My solution is called WebTables. It's a technology that lets you use tables without having to download them first.

The process starts with the target hashes. They are processed through the GPU to generate the derived candidate hashes. It's very difficult to go back from the candidate hashes to the target hashes - harder than going back to the original plains, and there's not a 1:1 mapping.

These candidate hashes are sent over the network to "The Cloud" - which is a server somewhere. This server can contain things such as very fast IO devices to make table searching incredibly fast.

The chains to regenerate are then passed back over the network, and are regenerated on the compute device, finding the passwords.

It works with CUDA and OpenCL - so you can use anything in your system, CPUs included.

And, unlike a lot of other promised "cloud tech," this stuff works today. Go play with it.

The key here is that anything involving the target hashes is in your control. I never see the target hashes, and you never send them.

Those who really know rainbow tables may ask, "But what about the target hashes being the last candidate hash for each target?" Simple - I skip them by default. I also skip the previous hash. In exchange for a roughly 0.01% drop in hit rate, you end up not telling me anything useful. Also, the chains are sorted by hash value before being sent.
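To make the flow concrete, here is a toy sketch of the three steps: the client derives candidate endpoint hashes from a target, the server looks them up in its table, and the client regenerates the returned chains. It is not the WebTables code or wire format. MD5 stands in for the real hash, the reduction function, the tiny keyspace, and all names are invented for illustration, and the "server" is just a Python dict.

```python
import hashlib

CHARSET = "abcdefghijklmnopqrstuvwxyz"   # toy keyspace: 4 lowercase letters
LENGTH = 4
CHAIN_LEN = 50                           # plains per chain


def H(plain):
    """Stand-in hash function (MD5 here, purely for illustration)."""
    return hashlib.md5(plain.encode()).digest()


def reduce_hash(digest, step):
    """Map a hash back into the keyspace; varies per step (rainbow style)."""
    n = int.from_bytes(digest, "big") + step
    chars = []
    for _ in range(LENGTH):
        n, r = divmod(n, len(CHARSET))
        chars.append(CHARSET[r])
    return "".join(chars)


def build_table(starts):
    """Server side: map each chain's final hash to its start plain(s)."""
    table = {}
    for start in starts:
        plain = start
        for step in range(CHAIN_LEN - 1):
            plain = reduce_hash(H(plain), step)
        table.setdefault(H(plain), []).append(start)
    return table


def candidate_hashes(target, skip_last=2):
    """Client side: endpoint the chain would have if `target` sat at each
    position. The last `skip_last` positions are skipped, so neither the
    target itself nor the hash one step after it is ever sent."""
    out = []
    for pos in range(CHAIN_LEN - 1 - skip_last, -1, -1):
        digest = target
        for step in range(pos, CHAIN_LEN - 1):
            digest = H(reduce_hash(digest, step))
        out.append(digest)
    return sorted(out)   # sorting hides which position produced which hash


def server_lookup(table, candidates):
    """Server side: return start plains of chains whose endpoint matched."""
    return [s for c in candidates for s in table.get(c, [])]


def regenerate(start, target):
    """Client side: walk a chain from its start looking for the target."""
    plain = start
    for step in range(CHAIN_LEN):
        if H(plain) == target:
            return plain
        plain = reduce_hash(H(plain), step)
    return None   # false alarm: endpoint matched but target is not in chain
```

The point of the split is visible in the function boundaries: only the output of `candidate_hashes` crosses the network in one direction and only chain start points come back, while `target` stays on the client the whole time.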

So, in summary:

WebTables is available to play with today. Go take a look.

It is instant access to tables - no downloading needed.

It is faster than most local spinning disks.

And you don't need the local storage space available - you can use huge tables without needing to be able to store them.

Finally, I'd like to talk about defenses. If you're reading this, you probably already know most of what I'm about to say.

You MUST salt your password hashes! It's critical.

And, more importantly, salts must be fully random. Giving an attacker preknowledge about the salt (such as a standard admin username) can let them build attacks against it. Also, the salt must be large. The attacker is only slowed by a factor of the total number of unique salts.

Lastly, iterate your hashes. Even a small iteration count hurts the attacker a lot more than it hurts you.
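As one way to put the three points together (random salt, large salt, iterations), here is a minimal sketch using PBKDF2 from Python's standard library. PBKDF2 is just one standard construction for salted, iterated hashing, picked here for the example. The 16 byte salt and the iteration count are illustrative assumptions, not recommendations from the talk.

```python
import hashlib
import hmac
import os


def hash_password(password, iterations=100_000, salt_bytes=16):
    """Return (salt, iterations, digest) for storage alongside the account."""
    salt = os.urandom(salt_bytes)   # fully random, unique per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"),
                                 salt, iterations)
    return salt, iterations, digest


def verify_password(password, salt, iterations, expected):
    """Recompute the iterated hash and compare in constant time."""
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"),
                                 salt, iterations)
    return hmac.compare_digest(digest, expected)
```

Because every stored hash gets its own random salt, an attacker has to attack each one separately and precomputed tables are useless. The iteration count multiplies the cost of every single guess.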

I'd like to close with a few thoughts.

Whatever you do, strive to be excellent at it. Those of you in college, focus on learning something and getting world class at it. Those who are amazing at what they do are never hunting for work.

Get involved in open source. I'm obviously biased and think my project is great, but get involved. You learn to integrate with bigger projects that are well beyond the "toy" stage that most college projects are. Also, no matter who you are, you can talk about open source projects. There's no "I can't tell you what I did" aspect of them. For interviews, I can talk about my work on Cryptohaze freely - and that's a good thing.

It takes a long time to learn something at the "really good" level. It can easily take 5-10 years to fully understand a moving tech - and that's OK.

Salt your hashes. Seriously.

And submit the "worst of the worst," those who store plaintext, to the plaintextoffenders site.

I'm easy to get in touch with. Enjoy!

